Audiobus: Use your music apps together.

Comments

Yeah I’m going to experiment with it and see if it’ll understand the modifications you have to make for VS. Ugh yeah I don’t remember much from GLSL from when this app first came out but it’s so freaking dense to drudge through and I’ll gladly take shortcuts

Thanks; great video btw

Speaking of which

This was my try

These videos are both simple and great!

Since we're talking about creating shaders…

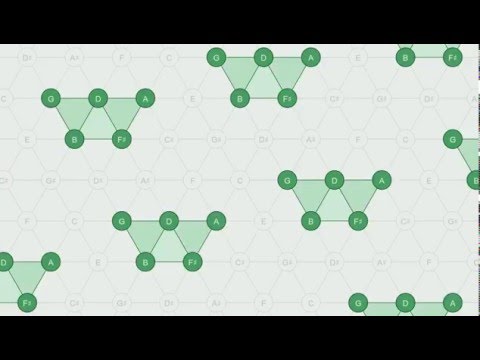

Just hit me (probably not for the first time) that I could create a simple one which would help in visualizing musical notes on a playing surface (say, a grid of pads organized in chromatic rows a fourth apart, as the strings on a bass or the pads on a Lightpad, Push, Launchpad, LinnStrument, etc.). Chances are that such a grid would be really easy to create and sending a value to position some marker shouldn't be too hard either.
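A minimal sketch of the mapping described above, in Python rather than shader code, just to pin down the arithmetic. It assumes an 8x8 grid, rows a fourth (5 semitones) apart, and MIDI note 36 on the bottom-left pad; `cells_for_note`, `BASE_NOTE`, and the other names are made up for illustration and are not part of VS. Because the rows overlap, one note can land on more than one pad.

```python
# Assumed layout (not from VS): 8x8 pads, each row a fourth above the row below.
ROWS, COLS = 8, 8
ROW_INTERVAL = 5   # semitones between rows (a perfect fourth)
BASE_NOTE = 36     # assumed MIDI note of the bottom-left pad (row 0, col 0)


def cells_for_note(note):
    """Return every (row, col) pad that plays the given MIDI note number."""
    cells = []
    for row in range(ROWS):
        # Column offset of this note within the current row.
        col = note - BASE_NOTE - row * ROW_INTERVAL
        if 0 <= col < COLS:
            cells.append((row, col))
    return cells
```

For example, `cells_for_note(41)` gives `[(0, 5), (1, 0)]`: the same pitch sits on the sixth pad of the bottom row and on the first pad of the next row up.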

Something similar would happen with hex keys, like on the Exquis or Tonnetz.

I mean… with a bit of chatbot help, I’m pretty sure we can come up with both fun and useful things.

While we're at it… How about NDI? VS outputs to NDI (since September 2021).

If I get this right, it means that we could bring those video (or audio+video!) feeds from an iPad to something else. That could be quite useful!

Now, the trick is to find some tools which support NDI input. Doesn't sound like TouchViZ does (which could make for something fun, merging feeds from two VS instances).

Yes, VS supports NDI and it was fixed for iOS in the latest version, 1.5.0

Thanks. The software is actually great

That’s a great idea - asking AI to create shaders for VS. I tried it yesterday but the resulting code wasn’t right unfortunately and didn’t work.

@sinosoidal, are there specific technical instructions/requirements which could be given to enable AI to create usable shader code for VS?

Being able to create user shaders would really take the app to the next level for those of us who aren’t able to code them ourselves.

Watching this thread for the answer WITH MUCH EXCITEMENT 👀

We haven't tried that ourselves extensively. Anyway, the shader will always need some kind of manual adjustment in order to be usable in VS. Has anyone seen this video?

Thanks @sinosoidal. Yes, i watched that video last year but not having any coding experience whatsoever it was still beyond me. I’ll watch it again now though to see if it helps me identify what is wrong with the generated code.

You say ‘the shader will always need some kind of manual adjustment in order to be usable in VS’, well, that’s what i was asking about really: is it possible to say what exactly is required to make it usable in VS? Is that covered in the video? If not, could you elaborate…in terms Google Gemini could understand? Cheers.

[…]

[…]

Similar situation for me. I do some coding (even taught people how to use some coding languages). Still gave up while watching the video, the first time.

I'd say so. Just not that directly. It's bit by bit, trial and error.

Not sure a chatbot could understand those differences.

So, one is about some default variables. Maybe the 'bot could get that, if it's ingested the explanation. In my (limited) experience with OpenAI's code generation algorithm, this is the kind of thing that is often missed. Had to “argue” for a long time to get ChatGPT to produce something useful in such a context (in p5js, which is rather well documented).

Then, there's the matter of adding parameters to replace some of the things which are set as constants in a piece of shader code. We could teach the 'bot about this through prompts, giving examples.

Otherwise, it's a matter of doing a fair bit of debugging.

Chances are, spending some time with Gemini to learn how to build shaders will get you to develop some coding skills which you'll be able to reuse elsewhere. While the VS-specific stuff won't carry over to other contexts, it could give you a space for experimentation.

Eventually, it's about building prompts which describe what you want. And that might be challenging until we understand GLSL.

(I might take that up as a little project myself, even though I'm not that clear on what I want, visually. Apart from ideas about displaying notes on a grid…)

You need to watch the video carefully. Shader programming is a really hard concept. A shader is a program that runs on a GPU and allows each pixel color to be calculated on a different GPU core, in parallel. That concept alone is mind-blowing.
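To make the "one small program per pixel" idea concrete, here is a rough CPU-side sketch in Python (not GLSL, and not VS's material format). `shade` and `render` are hypothetical names; the point is only that the colour of a pixel depends on its own coordinates and the time, so every pixel can be computed independently of the others.

```python
import math


def shade(u, v, t):
    """Colour of one pixel. u and v run from 0 to 1 across the image, t is time in seconds."""
    radius = 0.25 + 0.05 * math.sin(t)     # the circle pulses slowly over time
    dist = math.hypot(u - 0.5, v - 0.5)    # distance from the image centre
    return (1.0, 0.5, 0.0) if dist < radius else (0.0, 0.0, 0.0)


def render(width, height, t):
    """Call shade() once per pixel centre. A GPU would run these calls in parallel."""
    return [[shade((x + 0.5) / width, (y + 0.5) / height, t)
             for x in range(width)]
            for y in range(height)]
```

Here the loop in `render` visits pixels one after another; on a GPU there is no such loop, only the body of `shade` running for many pixels at once.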

The shaders you usually see around paint the whole image with a color. In VS we tell the shader to become transparent in the absence of color in order to blend with the layers below. There are also some transformations needed in order for the shader to display correctly. So it is not just a matter of copy and paste. But once you get the basics, the transformation can be quite easy.
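One possible reading of "transparent in the absence of color", sketched in Python. Taking alpha from the brightest channel is an assumption made for illustration; the thread does not say how VS derives transparency, and `with_alpha` and `blend_over` are made-up names.

```python
def with_alpha(rgb):
    """Turn an opaque RGB colour into RGBA, using the brightest channel as alpha (an assumption)."""
    r, g, b = rgb
    alpha = max(r, g, b)   # black gives 0.0 (see-through); any colour gives more than 0
    return (r, g, b, alpha)


def blend_over(top, bottom):
    """Standard 'over' blend of one RGBA pixel on top of an opaque RGB pixel."""
    r, g, b, a = top
    return tuple(a * c_top + (1.0 - a) * c_bot
                 for c_top, c_bot in zip((r, g, b), bottom))
```

With this rule, a black pixel from the shader leaves the layer below untouched, while a fully bright pixel covers it.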

I start from a very easy example in the video. I believe that a little trial and error and a little patience can pay off.

Starting from a very easy example is useful advice, for “computational thinking”. (“Break down any problem in simpler questions.”)

Thing is, it might get weird with a chatbot, which requires as much context to do a simple thing as to do a very difficult one.

Still, I'm willing to try. Probably following the tutorial to the letter, typing the exact code to draw a circle and change its colour, etc. Then, I might ask a 'bot to change that bit of code to… a square or a grid or a string…

Maybe even tonight.

@Enkerli, thanks for the advice, really appreciate it. Please keep us all posted if you make any progress.

@sinosoidal, great, thank you - i will indeed watch the video again very closely to see if i can get anywhere. Cheers.

Feed it some code from a frag file and explain to it the difference between the VS format and GLSL. You’ll get the results you need if you stick with it; I’ve been having a lot of success. Watch that video to really grasp the differences and understand them. Maybe convert one yourself, and then when you are using ChatGPT, you’ll see the errors it made and can correct them. Know the logic well and then you won’t have to worry about the maths; I’m having it create ones for me just from me explaining to it what I want. It requires a little back and forth but I usually get the required code by the third response without even having to edit it. Tell it to use texCoord, let it know VS already declares “time” so it doesn’t need to be declared, etc.
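The advice above could be turned into a reusable prompt along these lines. This is only a sketch: the rule wording is paraphrased from this thread (not from official VS documentation), `build_prompt` and `VS_RULES` are hypothetical names, and the two example shaders are whatever you paste in yourself.

```python
# Rules paraphrased from this thread; they are not an official VS specification.
VS_RULES = [
    "Use texCoord for the pixel coordinates.",
    "Do not declare time; VS already declares it.",
    "Make the output transparent where there is no colour, so lower layers show through.",
    "Expose values as parameters instead of hard-coding them as constants.",
]


def build_prompt(description, glsl_example, vs_example, rules=VS_RULES):
    """Assemble one prompt: rules first, then a before/after example, then the request."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        "You convert GLSL fragment shaders to the VS material format.\n"
        f"Rules:\n{rule_lines}\n\n"
        f"Plain GLSL example:\n{glsl_example}\n\n"
        f"Same shader adapted for VS:\n{vs_example}\n\n"
        f"Now write a VS shader for: {description}\n"
    )
```

Keeping the rules in one list makes it easy to add a new line each time the chatbot repeats a mistake.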

I want to like this app. Don't get me wrong. I love Imaginando apps, esp. FRMS. It's just that on my 2019 iPad Pro, the processor struggles to keep up with VS, which is frustrating. Also, as a person with a background in visual art, including digital art, I am confused by the interface. Secondarily, the VS version I bought for the Mac no longer works. Logic Pro has decided it can no longer validate the component. I kinda gave up on it after that.

Oh? Is this recent? Is this on an Apple Silicon Mac? Did you try checking the box to use VS anyway?

(It validates properly on my M1 MacBook Air, both in Logic Pro and Bitwig Studio. And performance is very good, including through NDI or Syphon (on the local machine). Not great from my iPad Pro to my Mac.)

Too bad about the performance issue. As @sinosoidal said, using GPUs can get tricky (as you've probably experienced, given your background). Wonder if there's a way to decrease the load, at the expense of framerate…

As for the interface, we're agreed. There's an internal logic to the whole thing… which requires some work to figure out.

There are many audio plugins like that, as well. The learning curve is such that you end up investing more in the plugin than in your own work. In the end, the reward can be high. Just not that transferable.

Personally, I found MIDI-based presets more useful, for my learning experience. Including some from the expansion packs.

A full tutorial, step-by-step, would help a lot. Start really simple. Get a track to trigger the display of a static image (either through envelope following or through MIDI; ideally both). No LFO or anything else. Music has to play for the shape to come up. That's one layer, turned on by default.

Then, add other layers.

Maybe give people a well-defined loop, with separate spectral bands coming up at different times, and get people to understand how the four bands can trigger different things.

After that, increase the complexity by playing with more parameters… while still focusing on effects which are really easy to notice.

Eventually, you can get into the nitty-gritty of using different channels (which is often tricky, depending on the DAW; Logic Pro doesn't make routing easy, Bitwig Studio does).

If such a tutorial is done through video, better make sure it's structured really well, with clear chapter markers and, in fact, separate videos for different parts of the process. Give opportunities for people to try things on their own. You can complement with reading material without relying on people reading anything to have fun.

(I realize that none of this may be relevant to you, @day_empire. I mostly mean it as a Learning Experience Designer.)

Right. Sounds easy, when you put it this way. Thing is, one requires prior knowledge of these differences to make it work.

From the video, it does sound like using `texCoord` and the other “pre-declared environment variables” is key. Plus the manifest section. Plus the fact that VS isn't really wrapping objects with those shaders, I guess?

Of course, if you have examples you could share from your own VS materials, that could be really useful!

(If Imaginando Lda had public repositories for those or otherwise fed a dataset with some of them, that could be very effective. Counterintuitively, perhaps, it would likely have a positive effect on sales of PerpleXon's packs as people would feel like they could understand what's going on.)

Brilliant to hear you’ve got it working and many thanks for the really useful advice. I’ll definitely be following it. Cheers.

So, still thinking about the learning experience… here’s the kind of thing I have in mind (having had a great experience with p5js).

https://p5js.org/learn/getting-started-in-webgl-shaders.html

Check my edit, I think you responded to my first part before I realized I was being too vague. Also here is a dithering one I had it make from a description and a little back and forth to get it to correct a couple of things. I have an extra speed parameter in there simply because I can’t quite remember how to communicate that with VS, so that’s an error due to me and not ChatGPT.

Nice!!

Yeah, that makes a whole difference, stated like this.

(To be clear, I wasn’t blaming you for the explanation. Just trying to set expectations, especially in a context of noncoders.)

Awesome! I’ll try that. Thanks a whole lot.

(My plan, at this point, is to get better acquainted with shaders through that p5js toot and Shadertoy. Then, I’ll try your dithering example in VS. Afterwards, I’ll prompt a chatbot specifying a few things about pre-declared environment variables. I’ll also feed it the plain GLSL code and VS version from the video. If all of that works as planned, I’ll ask for a way to position a dot in a grid based on MIDI note number. If that works polyphonically, I could use VS to create learning material.)

@Enkerli good luck! Yeah I’ve been keeping it pretty simplified so far and working my way up to getting it more complex. It definitely needs some guiding so I’m trying to optimize how to word it for quicker results. Yeah Shadertoy has been a big help for me for referencing as well as the KodeLife app. I will say, at least with ChatGPT, that it was pretty familiar with GLSL so a lot of what I needed to do was just steer it towards getting it right for VS after feeding it a few examples and correcting a few things it kept wanting to do in GLSL through some explaining.

I’m very excited about the results though. It really opens up a lot of opportunities, because learning the DSP side is just a lot to undertake, so the AI help is really filling in the gap. Although it could be doing some of that wrong and it would pass right by me unnoticed. But I’m getting mostly what I’m expecting from the output. I’ll keep sharing my results as they evolve and try to share what has helped steer the AI in the right direction.

@Fingolfinzz Does sound like you're having a nice experience. Feeding examples probably helps a lot.

I've used the Shadertoy example from the second part of the video and the VS version. At first, it kept wanting to add uniforms. Then, it behaved better. I was able to create an 8x8 grid and I can highlight some cells based on incoming data from KBD… the highlights are way off, though. And it sounds like it's only using one note, which isn't what I want. (I'm pretty sure VS can use data from more than one note in a given MIDI channel.)
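For the grid itself, the per-pixel test might look something like this, again in Python for readability rather than as VS shader code. It assumes coordinates from 0 to 1 and takes a set of held cells so that more than one note can light up; `cell_at`, `is_lit`, and the `origin_top` flag are illustrative names. It is not a diagnosis of the offset highlights, though a flipped vertical axis is one thing worth ruling out.

```python
ROWS, COLS = 8, 8


def cell_at(u, v, origin_top=False):
    """Grid cell (row, col) under a pixel, for u and v between 0 and 1.

    Row 0 is the bottom row. Set origin_top=True if v = 0 is the top edge instead.
    """
    col = min(int(u * COLS), COLS - 1)   # clamp so u == 1.0 stays in the last column
    row = min(int(v * ROWS), ROWS - 1)
    if origin_top:
        row = ROWS - 1 - row             # flip so row 0 is still the bottom row
    return row, col


def is_lit(u, v, held_cells, origin_top=False):
    """True if the pixel falls inside any currently held cell (polyphonic)."""
    return cell_at(u, v, origin_top) in held_cells
```

Passing a set such as `{(1, 4), (3, 2)}` as `held_cells` lights two pads at once, which is the polyphonic behaviour wanted here.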

Soooo… It's a start.

There have been some weird things. For instance, GLSL doesn't operate modulo on ints. And it doesn't work with strings. Sounds like it's pretty hard to get it to display any text, actually. Guess I should just use a static image with note names.
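For the modulo part, GLSL's built-in `mod(x, y)` works on floats and is defined as `x - y * floor(x / y)`, so an integer remainder can be emulated with float maths. The Python sketch below mirrors that formula; `glsl_mod` and `pitch_class` are made-up names, and which GLSL version VS targets is not stated in this thread.

```python
import math


def glsl_mod(x, y):
    """Same formula as GLSL's built-in mod(x, y): x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


def pitch_class(note):
    """Pitch class 0..11 (0 = C) of a MIDI note number, using float maths only."""
    return int(glsl_mod(float(note), 12.0))
```

Because of the `floor`, the result takes the sign of `y`, so `glsl_mod(-1.0, 12.0)` is `11.0` rather than a negative number.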

In my case, the main thing I'm trying to do (displaying incoming note information) could work well in a very simple MIDI plugin instead of VS. My main issue has been getting MIDI messages into a plugin (tried creating AUv3 plugins using Audiokit; not too hard except for getting MIDI in…).

At any rate, that's my weird little project. Just thought I'd try VS for that.

This little experiment does teach me quite a bit about different things. I'm still inspired to create something with VS that I can use in performance. (I typically want to display something about the melodic or harmonic content of what I play.)

There isn't a public repository but everyone is free to share their custom shaders here: https://patchstorage.com/platform/vs-visual-synthesizer/

Oh, I discovered that if I give the AI the text from the “Material” section from the manual https://www.imaginando.pt/products/vs-visual-synthesizer/help/layer-manager, it helps it understand the declared variables and gives it a little more insight into how to construct the shaders for VS.

Great tip, thanks.

Thanks. Killed it and reinstalled. Works now. New problem. VS Mac will not find video or image files for the background. Its file system is broken. No way to add my own content. Too much trouble. Sorta not worth the trouble.

I find the file loading system to be buggy too on Mac but it does work if you load a folder, exit, then go back again — also it won’t let you load from iCloud Drive which is a pain.